Introduction to AI

AI (Artificial Intelligence) is a machine’s ability to perform cognitive functions that humans perform, such as perceiving, learning, reasoning, and solving problems. The benchmark for AI is human-level performance in terms of reasoning, speech, and vision.

Image source: aiperspectives.com

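This article covers what AI is, its levels, history, goals, subfields, and types, how AI differs from machine learning, where AI is used, and why it is booming now.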

What is AI?

AI is the development of computer systems that simulate human-like intelligence, including reasoning, perception, learning, decision-making, and problem-solving. It enables machines to analyze data, learn from it, adapt, and perform tasks that traditionally require human intervention.

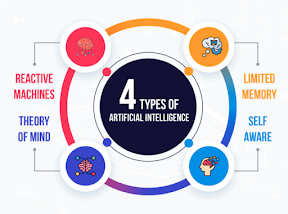

Levels of Artificial Intelligence

1. Reactive AI: This type of AI operates solely based on the current input without any memory or past experiences. It reacts to specific situations but lacks the ability to learn or form memories. Examples include game-playing AI, expert systems, and certain chatbots.

2. Limited Memory AI: This type of AI can make use of past experiences or information to make informed decisions. It has a limited memory capacity and can learn from previous interactions or data. Examples include autonomous vehicles that learn from driving experiences or recommendation systems that use past user preferences.

3. Theory of Mind AI: This type of AI is capable of understanding and inferring the mental states, intentions, and beliefs of others. It can comprehend emotions, desires, and thoughts, allowing for more sophisticated interaction and communication. Theory of Mind AI is still largely a concept and is an area of ongoing research.

4. Self-aware AI: This type of AI represents the hypothetical future development of machines that possess self-awareness and consciousness. It implies machines having a sense of their own existence, emotions, and consciousness similar to humans. Self-aware AI is purely speculative and not currently realized.

History of Artificial Intelligence

The history of AI dates back to the 1950s, when computer scientist John McCarthy coined the term. Since then, AI research has produced several significant breakthroughs, including rule-based systems, machine learning, deep learning, natural language processing, and cognitive computing.

Goals of Artificial Intelligence

The primary goals of AI are to create machines that can reason, learn, and understand natural language, and to develop intelligent agents that can perform tasks on behalf of humans.

Subfields of Artificial Intelligence

AI has several subfields, including:

Machine learning: Machine learning is a subfield of artificial intelligence (AI) that focuses on building algorithms and models that enable computers to learn from data and improve their performance on a task without being explicitly programmed. It is a type of statistical learning that involves training a machine learning model on a dataset and using it to make predictions or decisions on new data.

There are three main types of machine learning: supervised learning, unsupervised learning, and reinforcement learning.

In supervised learning, the algorithm is trained on labeled data, meaning that the correct output or prediction is already known for each input. The algorithm learns to generalize from the training data and can then make predictions on new, unseen data. Examples of supervised learning include image classification, speech recognition, and fraud detection.
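The idea of learning from labeled examples and then predicting on unseen inputs can be sketched in a few lines. This is a minimal illustration using a 1-nearest-neighbor classifier, one of the simplest supervised methods; the feature values, the labels, and the names `TRAINING_DATA` and `predict` are made up for the example.

```python
import math

# Labeled training data: (features, label). Values are invented for illustration.
TRAINING_DATA = [
    ((1.0, 1.2), "cat"),
    ((0.8, 1.0), "cat"),
    ((3.0, 3.5), "dog"),
    ((3.2, 2.9), "dog"),
]


def predict(features):
    """Return the label of the closest training example (1-nearest neighbor)."""
    _, nearest_label = min(
        TRAINING_DATA,
        key=lambda example: math.dist(example[0], features),
    )
    return nearest_label


# Predictions on inputs that were not in the training data
print(predict((1.1, 0.9)))
print(predict((2.9, 3.1)))
```

The first input lies near the "cat" examples and the second near the "dog" examples, so the classifier assigns those labels. Real systems use far more data and more capable models, but the train-then-predict pattern is the same.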

Unsupervised learning, on the other hand, involves training a model on unlabeled data, where the algorithm tries to find patterns or structure in the data without any prior knowledge of the correct output or prediction. This type of learning is often used for tasks such as clustering, anomaly detection, and dimensionality reduction.
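Clustering is a good way to see unsupervised learning in action. The sketch below is a simplified k-means on one-dimensional points: no labels are given, and the algorithm groups the points on its own. The data, the starting centroids, and the function name `k_means` are assumptions chosen for illustration.

```python
def k_means(points, centroids, iterations=10):
    """Cluster 1-D points around the given starting centroids."""
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster
        centroids = [
            sum(c) / len(c) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
    return centroids, clusters


# Unlabeled data with two visible groups
points = [1.0, 1.5, 2.0, 9.0, 9.5, 10.0]
centroids, clusters = k_means(points, centroids=[0.0, 5.0])
print(centroids)
print(clusters)
```

With these inputs the centroids settle at the means of the low group and the high group, which is the structure the algorithm discovered without being told what the groups were.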

Reinforcement learning is a type of machine learning that involves an agent interacting with an environment and learning through trial and error to maximize a reward signal. The agent takes actions in the environment and receives feedback in the form of rewards or punishments, which it uses to learn a policy or strategy for maximizing the reward signal.
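The agent, environment, reward, and policy described above can be sketched with tabular Q-learning, one common reinforcement learning algorithm. The environment here is a hypothetical five-cell corridor where the agent starts at the left end and is rewarded only for reaching the right end; all names and hyperparameter values are illustrative assumptions.

```python
import random

N_STATES = 5          # positions 0..4 in a corridor; position 4 is the goal
ACTIONS = (-1, +1)    # step left or step right


def step(state, action):
    """Environment: move, stay inside the corridor, reward 1 only on reaching the goal."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward


def train(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action_index]
    for _ in range(episodes):
        state = 0
        while state != N_STATES - 1:
            # Trial and error: explore sometimes, otherwise use current estimates
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                a = max((0, 1), key=lambda i: q[state][i])
            next_state, reward = step(state, ACTIONS[a])
            # Q-learning update: move toward reward plus discounted best future value
            q[state][a] += alpha * (reward + gamma * max(q[next_state]) - q[state][a])
            state = next_state
    return q


q_table = train()
# Greedy policy: the best-valued action in each non-goal state
policy = [ACTIONS[max((0, 1), key=lambda i: q_table[s][i])] for s in range(N_STATES - 1)]
print(policy)
```

After training, the greedy policy should be to step right (`+1`) in every state, since that is the shortest route to the reward.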

Machine learning has many practical applications in areas such as image and speech recognition, natural language processing, recommendation systems, predictive modeling, and autonomous vehicles. It has also been used in scientific research, such as in drug discovery and genomics.

While machine learning has the potential to transform many industries and improve our lives in numerous ways, there are also concerns about the ethical and social implications of its use. These include issues related to bias, privacy, and transparency, as well as the potential displacement of jobs due to automation. It will be important for developers and policymakers to address these concerns and ensure that machine learning is developed and deployed in a responsible and ethical manner.

Deep learning: Deep learning is a subset of machine learning that focuses on training artificial neural networks with multiple layers, also known as deep neural networks. These networks are loosely inspired by the structure of the human brain, and they can learn to make decisions from vast amounts of data.

Deep learning models consist of interconnected layers of artificial neurons called nodes. Each node receives input from the previous layer, performs calculations, and passes the output to the next layer. Passing data through the layers in this way is called forward propagation. The network learns to extract features and patterns from the input data as its weights are adjusted during training.
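A forward pass through a tiny network can be written out by hand. The sketch below assumes a network with two inputs, two hidden nodes, and one output node, a sigmoid activation, and hand-picked weights; none of these values come from a trained model.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(inputs, weights, biases):
    """One layer: each node takes a weighted sum of its inputs, adds a bias, applies sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]


# Hypothetical, hand-picked parameters: 2 inputs -> 2 hidden nodes -> 1 output node
HIDDEN_WEIGHTS = [[0.5, -0.5], [1.0, 1.0]]
HIDDEN_BIASES = [0.0, -1.0]
OUTPUT_WEIGHTS = [[1.0, -1.0]]
OUTPUT_BIASES = [0.0]


def forward(inputs):
    """Pass the inputs through the hidden layer, then the output layer."""
    hidden = layer_forward(inputs, HIDDEN_WEIGHTS, HIDDEN_BIASES)
    return layer_forward(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES)


print(forward([1.0, 1.0]))
```

Each call to `layer_forward` is one layer handing its outputs to the next. Deeper networks repeat the same step more times.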

The key advantage of deep learning is its ability to automatically learn hierarchical representations of data. By progressively extracting higher-level features from lower-level ones, deep learning models can handle complex tasks such as image and speech recognition, natural language processing, and even autonomous driving.

Training deep learning models typically involves a large labeled dataset and an iterative optimization algorithm such as gradient descent, which adjusts the network's weights and biases using gradients computed by backpropagation. The process requires significant computational power and is often accelerated with specialized hardware such as Graphics Processing Units (GPUs) or Tensor Processing Units (TPUs).
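To make the weight-adjustment loop concrete, here is a deliberately small sketch: gradient descent fitting a single linear neuron `y = w * x + b`. It is not a deep network, and with only one neuron the gradients can be written directly; in a multi-layer network, backpropagation computes the equivalent gradients layer by layer using the chain rule. The data, learning rate, and epoch count are illustrative assumptions.

```python
def train_neuron(data, learning_rate=0.05, epochs=2000):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        # Step each parameter against its gradient
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Labeled examples generated from y = 2x + 1 (values chosen for illustration)
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = train_neuron(data)
print(round(w, 3), round(b, 3))
```

The learned `w` and `b` should end up very close to 2 and 1, the values used to generate the data.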

Deep learning has revolutionized various fields, including computer vision, natural language processing, and healthcare. Its impressive performance on tasks with large amounts of data and complex patterns has made it a powerful tool for solving real-world problems and driving advancements in artificial intelligence.

Natural Language Processing: Natural Language Processing (NLP) is a subfield of artificial intelligence that focuses on the interaction between computers and human language. It involves the development of algorithms and models to enable computers to understand, interpret, and generate human language in a way that is both meaningful and useful.

Fig. Grammarly detects the tone of text

NLP encompasses a wide range of tasks and applications, including:

1. Text Classification: Categorizing text documents into predefined classes or categories, such as labeling emails as spam or sorting news articles by topic.

2. Named Entity Recognition (NER): Identifying and extracting named entities from text, such as names of people, organizations, locations, dates, etc.

3. Information Extraction: Automatically extracting structured information from unstructured text, such as extracting relationships between entities or identifying key facts from news articles.

4. Machine Translation: Translating text from one language to another, as Google Translate does.

5. Question Answering: Systems that can understand questions posed in natural language and provide accurate answers, like chatbots or voice assistants.

6. Sentiment Analysis: Determining the sentiment or opinion expressed in a given text, often used for analyzing customer reviews or social media sentiment.

7. Text Generation: Generating human-like text, including chatbots, language models, or even automated content generation.

NLP techniques involve various approaches, including rule-based methods, statistical models, and, more recently, deep learning models such as Recurrent Neural Networks (RNNs) and transformer models such as BERT (Bidirectional Encoder Representations from Transformers).
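The simplest of these approaches, a rule-based method, can be sketched for sentiment analysis. The example below counts words from two tiny hand-written lexicons; the word lists and the function name `classify_sentiment` are assumptions for illustration, and statistical or deep learning models handle negation, sarcasm, and context far better.

```python
# Tiny hand-written lexicons; a real system would use far larger ones or a trained model
POSITIVE_WORDS = {"good", "great", "love", "excellent", "happy"}
NEGATIVE_WORDS = {"bad", "terrible", "hate", "poor", "sad"}


def classify_sentiment(text):
    """Label text as positive, negative, or neutral by counting lexicon words."""
    # Lowercase each word and strip common punctuation from its edges
    words = [word.strip(".,!?").lower() for word in text.split()]
    score = sum(w in POSITIVE_WORDS for w in words) - sum(w in NEGATIVE_WORDS for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(classify_sentiment("I love this phone, the camera is great!"))
print(classify_sentiment("Terrible battery and poor support."))
print(classify_sentiment("The package arrived on Tuesday."))
```

The three sentences are labeled positive, negative, and neutral respectively, because the first contains two positive words, the second two negative words, and the third none from either list.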

NLP plays a crucial role in many applications and industries, including customer service, content analysis, information retrieval, virtual assistants, and language translation. It continues to advance, enabling computers to understand and interact with human language more effectively.

Computer vision: Computer vision is a field of artificial intelligence that focuses on enabling computers to understand, interpret, and analyze visual information from digital images or videos. It aims to replicate and enhance human vision capabilities by extracting meaningful insights and making intelligent decisions based on visual data.

Computer vision algorithms and models are designed to perform various tasks, including:

1. Object Detection and Recognition: Identifying and localizing objects within images or videos, and labeling them with specific classes or categories.

2. Image Classification: Assigning a label or category to an image based on its content. For example, distinguishing between different types of animals or recognizing handwritten digits.

3. Image Segmentation: Dividing an image into meaningful segments or regions to better understand the structure and boundaries of objects within the image.

4. Facial Recognition: Identifying and verifying individuals based on their facial features, often used in security systems or personal authentication applications.

5. Scene Understanding: Interpreting the overall context and content of a scene, including object relationships, spatial layout, and scene categorization.

6. Image Generation: Creating new images based on learned patterns and styles, such as generating realistic images from textual descriptions.

Computer vision techniques rely on various approaches, including traditional methods like image processing, feature extraction, and pattern recognition, as well as advanced deep learning models such as Convolutional Neural Networks (CNNs) and Generative Adversarial Networks (GANs).
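Feature extraction with a sliding filter, the core operation inside a CNN, can be shown on a tiny image. This is a minimal sketch assuming a 4x4 grayscale image and a hand-picked 2x2 kernel; a CNN learns its kernel values during training rather than having them written by hand. Like most deep learning libraries, the function computes cross-correlation (the kernel is not flipped).

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding) and sum the elementwise products."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(k_rows)
                for j in range(k_cols)
            )
            for c in range(out_cols)
        ]
        for r in range(out_rows)
    ]


# 4x4 grayscale image: dark left half (0), bright right half (9)
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
# Kernel that responds to a dark-to-bright change from left to right
vertical_edge_kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, vertical_edge_kernel)
print(feature_map)
```

The resulting feature map is zero in the flat regions and large only in the middle column, where the image changes from dark to bright. That is how a filter turns raw pixels into a feature such as "vertical edge here".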

Applications of computer vision can be found in a wide range of industries, including autonomous vehicles, surveillance systems, medical imaging, augmented reality, robotics, quality control, and many others. It continues to advance rapidly, enabling machines to extract rich visual information and make intelligent decisions based on what they see.

Types of Artificial Intelligence

There are generally three types of AI:

1. Narrow or Weak AI: This type of AI is designed to perform specific tasks and has a limited scope. It excels in a defined area, such as voice recognition or image classification, but lacks the ability to generalize beyond its specific domain.

2. General or Strong AI: General AI refers to systems that would possess human-like intelligence and could understand, learn, and apply knowledge across various domains. Such systems would perform tasks that typically require human intelligence and would have a broad range of capabilities.

3. Artificial Superintelligence: This hypothetical form of AI would surpass human intelligence and capabilities in almost every aspect. It would outperform humans in virtually all cognitive tasks and could greatly impact society and shape the future.

It's important to note that while narrow AI is the prevalent form of AI in use today, general AI and artificial superintelligence remain largely theoretical and are the subjects of ongoing research and speculation.

AI vs. Machine Learning

AI and machine learning are often used interchangeably, but they are not the same. Machine learning is a subset of AI that focuses on building algorithms that can learn from data, while AI is a broader field that encompasses machine learning and other techniques.

Where is AI used?

AI is being used in many industries and applications, including:

Healthcare: AI is used to diagnose diseases, analyze medical images, and develop personalized treatment plans.

Finance: AI is used to detect fraud, predict market trends, and make investment decisions.

Transportation: AI is used to develop autonomous vehicles, optimize logistics, and improve traffic flow.

Retail: AI is used to personalize customer experiences, optimize inventory management, and detect fraud.

Why is AI booming now?

AI is booming now because of several factors, including the availability of large amounts of data, the development of powerful computing resources, and advances in machine learning and other AI techniques. Additionally, businesses are seeing the potential benefits of AI in terms of increasing efficiency, improving decision-making, and reducing costs. This has led to a surge in investment in AI research and development, as well as the deployment of AI-based solutions in various industries.

Another reason for the boom in AI is the growing popularity of cloud computing and the availability of powerful AI tools and platforms that can be accessed on demand. This has made it easier for businesses of all sizes to experiment with AI and incorporate it into their operations without the need for large upfront investments.

However, the rapid pace of development in AI has also raised concerns about the impact it will have on jobs, privacy, and security. As AI becomes more advanced and widespread, it has the potential to automate many jobs that are currently done by humans, which could lead to significant disruption in the labor market. Additionally, there are concerns about the ethical and social implications of AI, particularly in areas such as bias, transparency, and accountability.

In conclusion, AI is a rapidly evolving field that has the potential to transform many aspects of our lives. While there are challenges and concerns associated with AI, the benefits it can provide in terms of increasing efficiency, improving decision-making, and reducing costs are too significant to ignore. As AI continues to evolve and become more sophisticated, it will be important to ensure that it is developed and deployed in a responsible and ethical manner, in order to maximize its potential benefits while minimizing its risks.
